Contents

Introduction. xv

PART 1 DATABASE ADMINISTRATION

Chapter 1 SQL Server 2012 Editions and Engine Enhancements 3

SQL Server 2012 Enhancements for Database Administrators. 4

Availability Enhancements. 4

Scalability and Performance Enhancements. 6

Manageability Enhancements. 7

Security Enhancements. 10

Programmability Enhancements. 11

SQL Server 2012 Editions. 12

Enterprise Edition. 12

Standard Edition. 13

Business Intelligence Edition. 14

Specialized Editions. 15

SQL Server 2012 Licensing Overview. 15

Hardware and Software Requirements. 16

Installation, Upgrade, and Migration Strategies. 17

The In-Place Upgrade. 17

Side-by-Side Migration. 19

What do you think of this book? We want to hear from you!

Microsoft is interested in hearing your feedback so we can continually improve our books and learning

resources for you. To participate in a brief online survey, please visit:

microsoft.com/learning/booksurvey


Chapter 2 High-Availability and Disaster-Recovery Enhancements 21

SQL Server AlwaysOn: A Flexible and Integrated Solution. 21

AlwaysOn Availability Groups . 23

Understanding Concepts and Terminology . 24

Configuring Availability Groups . 29

Monitoring Availability Groups with the Dashboard. 31

Active Secondaries. 32

Read-Only Access to Secondary Replicas . 33

Backups on Secondary . 33

AlwaysOn Failover Cluster Instances. 34

Support for Deploying SQL Server 2012 on Windows Server Core . 36

SQL Server 2012 Prerequisites for Server Core. 37

SQL Server Features Supported on Server Core. 38

SQL Server on Server Core Installation Alternatives . 38

Additional High-Availability and Disaster-Recovery Enhancements. 39

Support for Server Message Block . 39

Database Recovery Advisor. 39

Online Operations. 40

Rolling Upgrade and Patch Management. 40

Chapter 3 Performance and Scalability 41

Columnstore Index Overview. 41

Columnstore Index Fundamentals and Architecture. 42

How Is Data Stored When Using a Columnstore Index? 42

How Do Columnstore Indexes Significantly Improve the Speed of Queries? 44

Columnstore Index Storage Organization. 45

Columnstore Index Support and SQL Server 2012. 46

Columnstore Index Restrictions. 46

Columnstore Index Design Considerations and Loading Data. 47

When to Build a Columnstore Index. 47

When Not to Build a Columnstore Index. 48

Loading New Data. 48

Beyond-Relational Example . 76

FILESTREAM Enhancements. 76

FileTable . 77

FileTable Prerequisites. 78

Creating a FileTable. 80

Managing FileTable. 81

Full-Text Search. 81

Statistical Semantic Search. 82

Configuring Semantic Search. 83

Semantic Search Examples. 85

Spatial Enhancements . 86

Spatial Data Scenarios . 86

Spatial Data Features Supported in SQL Server . 86

Spatial Type Improvements. 87

Additional Spatial Improvements. 89

Extended Events. 90

PART 2 BUSINESS INTELLIGENCE DEVELOPMENT

Chapter 6 Integration Services 93

Developer Experience . 93

Add New Project Dialog Box. 93

General Interface Changes. 95

Getting Started Window. 96

SSIS Toolbox. 97

Shared Connection Managers. 98

Scripting Engine. 99

Expression Indicators. 100

Undo and Redo . 100

Package Sort By Name. 100

Status Indicators. 101

Control Flow. 101

Expression Task. 101

Execute Package Task. 102

Data Flow . 103

Sources and Destinations. 103

Transformations. 106

Column References. 108

Collapsible Grouping. 109

Data Viewer. 110

Change Data Capture Support. 111

CDC Control Flow. 112

CDC Data Flow. 113

Flexible Package Design. 114

Variables. 115

Expressions. 115

Deployment Models. 116

Supported Deployment Models. 116

Project Deployment Model Features. 118

Project Deployment Workflow . 119

Parameters. 122

Project Parameters. 123

Package Parameters. 124

Parameter Usage. 124

Post-Deployment Parameter Values. 125

Integration Services Catalog. 128

Catalog Creation . 128

Catalog Properties. 129

Environment Objects. 132

Administration. 135

Validation. 135

Package Execution. 135

Logging and Troubleshooting Tools. 137

Security . 139

Package File Format. 139

Miscellaneous Changes. 197

SharePoint Integration . 197

Metadata. 197

Bulk Updates and Export . 197

Transactions . 198

Windows PowerShell. 198

Chapter 9 Analysis Services and PowerPivot 199

Analysis Services. 199

Server Modes. 199

Analysis Services Projects. 201

Tabular Modeling. 203

Multidimensional Model Storage. 215

Server Management . 215

Programmability. 217

PowerPivot for Excel. 218

Installation and Upgrade . 218

Usability. 218

Model Enhancements. 221

DAX. 222

PowerPivot for SharePoint. 224

Installation and Configuration. 224

Management. 224

Chapter 10 Reporting Services 229

New Renderers . 229

Excel 2010 Renderer . 229

Word 2010 Renderer. 230

SharePoint Shared Service Architecture. 230

Feature Support by SharePoint Edition. 230

Shared Service Architecture Benefits. 231

Service Application Configuration . 231

Power View. 232

Data Sources. 233

Power View Design Environment . 234

Data Visualization . 237

Sort Order. 241

Multiple Views . 241

Highlighted Values. 242

Filters. 243

Display Modes . 245

PowerPoint Export. 246

Data Alerts. 246

Data Alert Designer. 246

Alerting Service . 248

Data Alert Manager. 249

Alerting Configuration . 250

Index 251



CHAPTER 1

SQL Server 2012 Editions and Engine Enhancements

SQL Server 2012 is Microsoft’s latest cloud-ready information platform. Organizations can use SQL Server 2012 to efficiently protect, unlock, and scale the power of their data across the desktop, mobile devices, the datacenter, and either a private or public cloud. Building on the success of the SQL Server 2008 R2 release, SQL Server 2012 has made a strong impact on organizations worldwide with its significant capabilities. It provides organizations with mission-critical performance and availability, as well as the potential to unlock breakthrough insights with pervasive data discovery across the organization. Finally, SQL Server 2012 delivers a variety of hybrid solutions you can choose from. For example, an organization can develop and deploy applications and database solutions on traditional nonvirtualized environments, on appliances, and in on-premises private clouds or off-premises public clouds. Moreover, these solutions can easily integrate with one another, offering a fully integrated hybrid solution. Figure 1-1 illustrates the Cloud Ready Information Platform ecosystem.

[Figure 1-1: Hybrid IT spanning traditional nonvirtualized applications, a private (on-premises) cloud, a public (off-premises) cloud, and managed services; the cloud options are pooled (virtualized), elastic, self-service, and usage-based.]

FIGURE 1-1 SQL Server 2012, cloud-ready information platform

To prepare readers for SQL Server 2012, this chapter examines the new SQL Server 2012 features,

capabilities, and editions from a database administrator’s perspective. It also discusses SQL Server

2012 hardware and software requirements and installation strategies.


SQL Server 2012 Enhancements for Database Administrators

Now more than ever, organizations require a trusted, cost-effective, and scalable database platform that offers mission-critical confidence, breakthrough insights, and flexible cloud-based offerings. These organizations face ever-changing business conditions in the global economy. They also face challenges such as IT budget constraints, the need to stay competitive by obtaining business insights, and the need to use the right information at the right time. In addition, organizations must constantly adjust because new and important trends are regularly changing the way software is developed and deployed. Some of these new trends include data explosion (enormous increases in data usage), consumerization of IT, big data (large data sets), and private and public cloud deployments.

Microsoft has made major investments in the SQL Server 2012 product as a whole. This chapter divides the new features and breakthrough capabilities that should interest database administrators (DBAs) into the following categories: Availability, Manageability, Programmability, Scalability and Performance, and Security. The upcoming sections introduce some of the new features and capabilities. Other chapters in this book explain the major technology investments in more depth.

Availability Enhancements

A large number of high-availability enhancements were added to SQL Server 2012. These enhancements should increase both the confidence organizations have in their databases and the maximum uptime for those databases. SQL Server 2012 continues to deliver database mirroring, log shipping, and replication. However, it now also offers a new brand of technologies, known as AlwaysOn, for achieving both high availability and disaster recovery. Let’s quickly review the new AlwaysOn high-availability enhancements:

• AlwaysOn Availability Groups For DBAs, AlwaysOn Availability Groups is probably the most highly anticipated feature related to the Database Engine. This new capability protects databases and allows multiple databases to fail over as a single unit. The solution supports up to four secondary replicas, which improves data redundancy and protection. Up to two of these four secondary replicas can be configured as synchronous secondaries to ensure the copies are up to date. The secondary replicas can reside within a datacenter for high availability within a site, or across datacenters for disaster recovery. In addition, AlwaysOn Availability Groups provide a higher return on investment because they increase hardware utilization. The secondaries are active and readable, and they can be used to offload backups, reporting, and ad hoc queries from the primary replica. The solution is tightly integrated into SQL Server Management Studio, is straightforward to deploy, and supports either shared storage or local storage.

Figure 1-2 illustrates an organization with a global presence achieving both high availability and disaster recovery for mission-critical databases using AlwaysOn Availability Groups. In addition, the secondary replicas are being used to offload reporting and backups.


[Figure 1-2: Replica1 through Replica4 spread across a primary datacenter and a secondary datacenter, connected by synchronous and asynchronous data movement; secondary replicas serve reports and backups.]

FIGURE 1-2 AlwaysOn Availability Groups for an organization with a global presence

• AlwaysOn Failover Cluster Instances (FCI) AlwaysOn Failover Cluster Instances provide superior instance-level protection using Windows Server Failover Clustering and shared storage. SQL Server 2012 adds a number of enhancements to FCI that improve availability and reliability. First, FCI now supports multi-subnet failover clusters. The subnets where the FCI nodes reside can be located in the same datacenter or in geographically dispersed sites. Second, local storage can be used for the TempDB database. Third, startup and recovery are faster after a failover occurs. Finally, improved cluster health-detection policies provide stronger and more flexible failover.

• Support for Windows Server Core Installing SQL Server 2012 on Windows Server Core is now supported. Windows Server Core is a scaled-down edition of the Windows operating system and requires approximately 50 to 60 percent fewer reboots when patching servers. This translates to greater SQL Server uptime and increased security. Server Core deployments require Windows Server 2008 R2 SP1 or later. Chapter 2, “High-Availability and Disaster-Recovery Enhancements,” discusses deploying SQL Server 2012 on Server Core, including the features supported.

• Recovery Advisor A new visual timeline has been introduced in SQL Server Management Studio to simplify the database restore process. As illustrated in Figure 1-3, you can use the scroll bar beneath the timeline to specify which backups to restore when recovering a database to a point in time.

FIGURE 1-3 Recovery Advisor visual timeline
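The Recovery Advisor timeline drives a point-in-time restore, and you can also script that restore in Transact-SQL. The following is a minimal sketch. It assumes a database in the full recovery model with one full backup and two log backups; the database name SalesDB and the backup paths are hypothetical. In a real recovery you would normally take a tail-log backup first, because REPLACE overwrites the existing database.

```sql
-- Hypothetical database name and backup paths; adjust for your environment.
-- List the backup sets in the file to help choose a restore point.
RESTORE HEADERONLY FROM DISK = N'D:\Backups\SalesDB_full.bak';

-- 1. Restore the full backup, leaving the database in the restoring state.
RESTORE DATABASE SalesDB
FROM DISK = N'D:\Backups\SalesDB_full.bak'
WITH NORECOVERY, REPLACE;

-- 2. Apply the log backups in sequence, still without recovery.
RESTORE LOG SalesDB
FROM DISK = N'D:\Backups\SalesDB_log_1.trn'
WITH NORECOVERY;

-- 3. Apply the last log backup only up to the chosen point in time,
--    then recover the database so it comes online.
RESTORE LOG SalesDB
FROM DISK = N'D:\Backups\SalesDB_log_2.trn'
WITH STOPAT = N'2012-03-15T14:30:00', RECOVERY;
```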

Note For detailed information about the AlwaysOn technologies and other high-availability

enhancements, be sure to read Chapter 2.
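To make the AlwaysOn Availability Groups description more concrete, here is a hedged sketch of the primary-side Transact-SQL. It creates a group with one synchronous secondary and one asynchronous secondary, both readable, with backups preferring the secondaries. The sketch assumes several prerequisites are already in place:

- a Windows Server Failover Clustering cluster exists;
- AlwaysOn is enabled on each instance;
- database mirroring endpoints listen on port 5022;
- SalesDB is in the full recovery model and has a full backup.

The group, database, server, and endpoint names are all hypothetical. This script is not the whole setup. Each secondary must still join the group (ALTER AVAILABILITY GROUP SalesAG JOIN) and have the database restored WITH NORECOVERY before it synchronizes.

```sql
-- Run on the intended primary replica (SQLNODE1). All names are hypothetical.
CREATE AVAILABILITY GROUP SalesAG
WITH (AUTOMATED_BACKUP_PREFERENCE = SECONDARY)   -- prefer backups on secondaries
FOR DATABASE SalesDB
REPLICA ON
    -- Primary replica: synchronous commit, automatic failover
    N'SQLNODE1' WITH (
        ENDPOINT_URL = N'TCP://sqlnode1.contoso.com:5022',
        AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,
        FAILOVER_MODE = AUTOMATIC),
    -- Synchronous, readable secondary in the same datacenter (high availability)
    N'SQLNODE2' WITH (
        ENDPOINT_URL = N'TCP://sqlnode2.contoso.com:5022',
        AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,
        FAILOVER_MODE = AUTOMATIC,
        SECONDARY_ROLE (ALLOW_CONNECTIONS = READ_ONLY)),
    -- Asynchronous, readable secondary in a remote datacenter (disaster recovery)
    N'SQLNODE3' WITH (
        ENDPOINT_URL = N'TCP://sqlnode3.contoso.com:5022',
        AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
        FAILOVER_MODE = MANUAL,
        SECONDARY_ROLE (ALLOW_CONNECTIONS = READ_ONLY));
```

With ALLOW_CONNECTIONS = READ_ONLY, a secondary accepts only connections whose connection string specifies ApplicationIntent=ReadOnly. This is how reporting and ad hoc workloads are directed to the secondaries.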

Scalability and Performance Enhancements

The SQL Server product group has made sizable investments in improving scalability and

performance

associated with the SQL Server Database Engine. Some of the main enhancements that

allow organizations to improve their SQL Server workloads include the following:

• Columnstore Indexes More and more organizations must deliver breakthrough and predictable performance on large data sets to stay competitive. SQL Server 2012 introduces a new in-memory columnstore index built directly into the relational engine. Together with advanced query-processing enhancements, these technologies provide blazing-fast performance and speed up queries associated with data warehouse workloads by 10 to 100 times. In some cases, customers have experienced a 400 percent improvement in performance. For more information on this new capability for data warehouse workloads, review Chapter 3, “Performance and Scalability.”

• Partition Support Increased To dramatically boost scalability and performance associated with large tables and data warehouses, SQL Server 2012 now supports up to 15,000 partitions per table by default. This is a significant increase from the previous version of SQL Server, which was limited to 1,000 partitions by default. This expanded support also helps enable large sliding-window scenarios for data warehouse maintenance.

• Online Index Create, Rebuild, and Drop Many organizations running mission-critical workloads use online indexing to ensure their business environment does not experience downtime during routine index maintenance. With SQL Server 2012, indexes containing varchar(max), nvarchar(max), and varbinary(max) columns can now be created, rebuilt, and dropped as an online operation. This is vital for organizations that require maximum uptime and concurrent user activity during index operations.

• Achieve Maximum Scalability with Windows Server 2008 R2 Windows Server 2008 R2 is built to achieve unprecedented workload size, dynamic scalability, and across-the-board availability and reliability. As a result, SQL Server 2012 can achieve maximum scalability when running on Windows Server 2008 R2, because the operating system supports up to 256 logical processors and 2 terabytes of memory in a single operating system instance.
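The following hedged sketch shows what two of these features look like in Transact-SQL. The table, column, and index names are hypothetical. In SQL Server 2012, only nonclustered columnstore indexes can be created, and a table becomes read-only while one is enabled. For that reason, the sketch disables and rebuilds the index around a data load. Online index operations require an edition that supports them, such as Enterprise.

```sql
-- Hypothetical data warehouse fact table.
-- 1. Columnstore index over the columns that typical warehouse queries scan.
CREATE NONCLUSTERED COLUMNSTORE INDEX IX_FactSales_CS
ON dbo.FactSales (OrderDateKey, ProductKey, StoreKey, SalesQuantity, SalesAmount);

-- The table is read-only while the columnstore index is enabled, so one
-- loading approach is to disable the index, load the data, and rebuild it.
ALTER INDEX IX_FactSales_CS ON dbo.FactSales DISABLE;
-- (load new rows into dbo.FactSales here)
ALTER INDEX IX_FactSales_CS ON dbo.FactSales REBUILD;

-- 2. Online rebuild of an index on a hypothetical table that contains a
--    varbinary(max) column, which SQL Server 2012 now allows.
ALTER INDEX PK_Documents ON dbo.Documents
REBUILD WITH (ONLINE = ON);
```

Switching a separately loaded partition into the fact table is another common way to add data while a columnstore index stays in place.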

Manageability Enhancements

SQL Server deployments are growing more numerous and more common in organizations, so database administrators need the appropriate tools to manage their SQL Server infrastructure successfully. Recall that previous releases of SQL Server included many new features tailored toward manageability. For example, database administrators could easily use Policy-Based Management, Resource Governor, Data Collector, data-tier applications, and Utility Control Point. The product group responsible for manageability has continued to invest in this area. With SQL Server 2012, it unveiled additional investments in SQL Server tools and monitoring features. The following list describes the manageability enhancements in SQL Server 2012:

• SQL Server Management Studio With SQL Server 2012, IntelliSense and Transact-SQL debugging have been enhanced to improve the development experience in SQL Server Management Studio.

• IntelliSense Enhancements A completion list now suggests string matches based on partial words, whereas in the past it typically made recommendations based on the first character.

• A new Insert Snippet menu This new feature is illustrated in Figure 1-4. It offers developers a categorized list of snippets to choose from to streamline code. To open the snippet picker tooltip, press CTRL+K followed by CTRL+X, or select it from the Edit menu.

• Transact-SQL Debugger This feature makes it possible to debug Transact-SQL scripts on instances of SQL Server 2005 Service Pack 2 (SP2) or later, and it enhances breakpoint functionality.

FIGURE 1-4 Leveraging the Transact-SQL code snippet template as a starting point when writing new Transact-SQL statements in the SQL Server Database Engine Query Editor

• Resource Governor Enhancements Many organizations currently use Resource Governor to gain predictable performance and to better manage SQL Server workloads and resources. They do this by setting limits on resource consumption based on incoming requests. In the past few years, customers have also been requesting additional improvements to the Resource Governor feature. Customers wanted to increase the maximum number of resource pools and to support large-scale, multitenant database solutions with a higher level of isolation between workloads. They also wanted predictable chargeback and vertical isolation of machine resources.

The SQL Server product group responsible for the Resource Governor feature introduced new capabilities to address these requests from customers and the SQL Server community. To begin, larger-scale multitenancy can now be achieved on a single instance of SQL Server, because the number of resource pools that Resource Governor supports increased from 20 to 64. In addition, a maximum cap for CPU usage has been introduced to enable predictable chargeback and isolation on the CPU. Finally, resource pools can be affinitized to an individual scheduler or a group of schedulers for vertical isolation of machine resources.

A new Dynamic Management View (DMV) called sys.dm_resource_governor_resource_pool_affinity helps database administrators track resource pool affinity.

Let’s review an example of some of the new Resource Governor features in action. In the following example, resource pool Pool25 is altered to be affinitized to six schedulers (8, 12, 13, 14, 15, and 16), and it’s guaranteed a minimum of 5 percent of the CPU capacity of those schedulers. It can receive no more than 80 percent of the capacity of those schedulers. When there is contention for CPU bandwidth, the maximum average CPU bandwidth that will be allocated is 40 percent.

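ALTER RESOURCE POOL Pool25
WITH (
    MIN_CPU_PERCENT = 5,                 -- guaranteed average CPU bandwidth under contention
    MAX_CPU_PERCENT = 40,                -- maximum average CPU bandwidth under contention
    CAP_CPU_PERCENT = 80,                -- hard cap, enforced even without contention
    AFFINITY SCHEDULER = (8, 12 TO 16),  -- schedulers 8, 12, 13, 14, 15, and 16
    MIN_MEMORY_PERCENT = 5,              -- memory reserved for this pool
    MAX_MEMORY_PERCENT = 15              -- upper limit on memory for this pool
);

-- Apply the pending Resource Governor configuration change
ALTER RESOURCE GOVERNOR RECONFIGURE;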

• Contained Databases In previous versions of SQL Server, authentication made database portability a challenge. Users in a database were associated with logins on the source instance of SQL Server, so if the database was moved to another instance, the required logins might not exist there. With the introduction of contained databases in SQL Server 2012, users are authenticated directly into a user database without depending on logins in the Database Engine. This feature makes user databases easier to move among servers, because contained databases have few or no external dependencies.

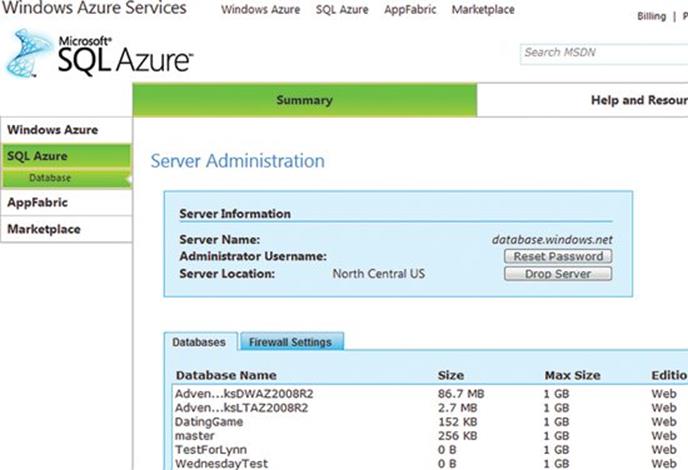

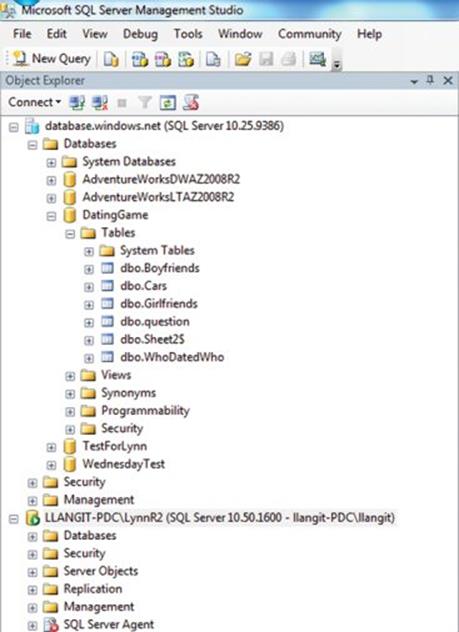

• Tight Integration with SQL Azure A new Deploy Database To SQL Azure wizard, pictured in Figure 1-5, is integrated into SQL Server Management Studio to help organizations deploy an on-premises database to SQL Azure. Furthermore, SQL Azure Data Sync enables new scenarios. It is a cloud service that provides bidirectional data synchronization between databases across the datacenter and the cloud.

FIGURE 1-5 Deploying a database to SQL Azure with the Deploy Database Wizard

• Startup Options Relocated SQL Server Configuration Manager now includes a new Startup Parameters tab for better manageability of the parameters required for startup. Specifying startup parameters, which was at times a tedious task in previous versions of SQL Server, is now easy. To open the Startup Parameters tab, right-click a SQL Server instance name in SQL Server Configuration Manager and then select Properties.

• Data-Tier Application (DAC) Enhancements SQL Server 2008 R2 introduced the concept of data-tier applications. A data-tier application is a single unit of deployment containing all of the database’s schema, dependent objects, and deployment requirements used by an application. SQL Server 2012 introduces a few enhancements to DAC. With the new SQL Server, DAC upgrades are performed in place, rather than through the side-by-side upgrade process we’ve all grown accustomed to over the years. Moreover, DACs can be deployed, imported, and exported more easily across on-premises and public cloud environments, such as SQL Azure. Finally, data-tier applications now support many more objects than in the previous SQL Server release.
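After you apply the Pool25 example above, you can query the new affinity DMV to confirm which schedulers the pool is bound to. This is a minimal sketch that assumes Pool25 exists. It joins to sys.dm_resource_governor_resource_pools on pool_id to display the pool name, and scheduler_mask is the bitmask of the schedulers associated with the pool.

```sql
-- Show the scheduler affinity configured for resource pool Pool25.
SELECT p.name            AS pool_name,
       a.processor_group,
       a.scheduler_mask   -- bitmask of the schedulers the pool is affinitized to
FROM sys.dm_resource_governor_resource_pool_affinity AS a
JOIN sys.dm_resource_governor_resource_pools AS p
    ON p.pool_id = a.pool_id
WHERE p.name = N'Pool25';
```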

Security Enhancements

It has been approximately 10 years since Microsoft launched its Trustworthy Computing initiative. Since then, SQL Server has had the best track record, with the fewest vulnerabilities and exposures, among the major database products in the industry. The graph shown in Figure 1-6 is based on data from the National Institute of Standards and Technology (Source: ITIC 2011: SQL Server Delivers Industry-Leading Security). It shows common vulnerabilities and exposures reported from January 2002 to June 2010.

[Figure 1-6: Bar chart of reported vulnerabilities and exposures for Oracle, DB2, MySQL, and SQL Server; vertical axis from 0 to 350.]

FIGURE 1-6 Common vulnerabilities and exposures reported to NIST from January 2002 to January 2010

With SQL Server 2012, the product continues to expand on this solid foundation to deliver enhanced security and compliance within the database platform. For detailed information about all the security enhancements associated with the Database Engine, review Chapter 4, “Security Enhancements.” For now, here is a snapshot of some of the new enterprise-ready security capabilities and controls that help organizations meet strict compliance policies and regulations:

• User-defined server roles for easier separation of duties

• Audit enhancements to improve compliance and resiliency

• Simplified security management, with a default schema for groups

• Contained Database Authentication, which authenticates users with self-contained access information stored in the database, without the need for server logins

• SharePoint and Active Directory security models for higher data security in end-user reports
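Two capabilities in this list, contained database authentication and user-defined server roles, can be sketched in Transact-SQL as follows. All database, user, role, and login names are hypothetical, and the password is a placeholder. The sketch also assumes the Windows group CONTOSO\DBAOps already exists as a login. Server-scoped permissions can be granted only while master is the current database, which is why the script switches back to master.

```sql
-- 1. Allow contained database authentication for the instance.
EXEC sp_configure 'contained database authentication', 1;
RECONFIGURE;
GO

-- 2. Create a partially contained database (the containment level
--    supported in SQL Server 2012) and a user with its own password.
CREATE DATABASE ContainedSalesDB CONTAINMENT = PARTIAL;
GO
USE ContainedSalesDB;
GO
CREATE USER AppUser WITH PASSWORD = N'<replace with a strong password>';
GO

-- 3. User-defined server role for separation of duties.
USE master;
GO
CREATE SERVER ROLE MonitoringAdmins;
GRANT VIEW SERVER STATE TO MonitoringAdmins;
ALTER SERVER ROLE MonitoringAdmins ADD MEMBER [CONTOSO\DBAOps];
GO
```

To connect as AppUser, a client names ContainedSalesDB as the initial database in its connection string; no server login is involved.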

Programmability Enhancements

SQL Server 2012 also includes a large investment in programmability. Specifically, there is support for “beyond relational” elements such as XML, Spatial, Documents, Digital Media, Scientific Records, factoids, and other unstructured data types. Why such investments? Organizations have demanded a way to reduce the costs of managing both structured and nonstructured data. They wanted to simplify the development of applications over all data, and they wanted improved management and search capabilities for all data. Take a minute to review some of the SQL Server 2012 investments that positively affect programmability. For more information about programmability and beyond-relational elements, please review Chapter 5, “Programmability and Beyond-Relational Enhancements.”

• FileTable Applications typically store data within a relational database engine; however, many applications also maintain data in unstructured formats, such as documents, media files, and XML. Unstructured data usually resides on a file server and not directly in a relational database such as SQL Server. As you can imagine, it becomes challenging for organizations not only to manage their structured and unstructured data across these disparate systems, but also to keep them in sync. FileTable, a new capability in SQL Server 2012, addresses these challenges. It builds on the FILESTREAM technology that was first introduced with SQL Server 2008. FileTable offers organizations Windows file namespace support and application compatibility with the file data stored in SQL Server. As an added bonus, when applications integrate storage and data management within SQL Server, full-text and semantic search become possible over both unstructured and structured data.

• Statistical Semantic Search With new semantic search functionality, SQL Server 2012 allows organizations to gain deeper insight into unstructured data stored within the Database Engine. Three new Transact-SQL rowset functions were introduced to query not only the words in a document but also the meaning of the document.

• Full-Text Search Enhancements Full-text search in SQL Server 2012 offers better query performance and scale. It also introduces property-scoped searching functionality, which allows organizations to search properties such as Author and Title without the need

Specialized Editions

Above and beyond the three main editions discussed earlier, SQL Server 2012 continues to deliver

specialized editions for organizations that have a unique set of requirements. Some examples include

the following:

• Developer The Developer edition includes all of the features and functionality found in the Enterprise edition; however, it is meant strictly for development, testing, and demonstration. Note that you can transition a SQL Server Developer installation directly into production by upgrading it to SQL Server 2012 Enterprise without reinstalling.

• Web Available at a much more affordable price than the Enterprise and Standard editions, SQL Server 2012 Web is focused on service providers hosting Internet-facing web services environments. Unlike the Express edition, this edition has no database size restrictions; it supports four processors and up to 64 GB of memory. SQL Server 2012 Web does not offer the same premium features found in the Enterprise and Standard editions, but it remains an ideal platform for hosting websites and web applications.

• Express This free edition is the best entry-level alternative for independent software vendors, nonprofessional developers, and hobbyists building client applications. Individuals learning about databases or learning how to build client applications will find that this edition meets all their needs. In a nutshell, this edition is limited to one processor and 1 GB of memory, and it can have a maximum database size of 10 GB. Also, Express is integrated with Microsoft Visual Studio.

Note Review “Features Supported by the Editions of SQL Server 2012” at http://msdn.microsoft.com/en-us/library/cc645993(v=sql.110).aspx and http://www.microsoft.com/sqlserver/en/us/future-editions/sql2012-editions.aspx for a complete comparison of the key capabilities of the different editions of SQL Server 2012.
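Before planning an edition change or upgrade, you can confirm which edition and version an instance runs by querying the SERVERPROPERTY function. This short sketch is not specific to SQL Server 2012. The EngineEdition value groups editions into classes:

- 2: Standard class, which includes Web and Business Intelligence.
- 3: Enterprise class, which includes Developer and Evaluation.
- 4: Express.

```sql
-- Report edition and version information for the current instance.
SELECT SERVERPROPERTY('Edition')        AS Edition,        -- edition name
       SERVERPROPERTY('EngineEdition')  AS EngineEdition,  -- 2 = Standard class, 3 = Enterprise class, 4 = Express
       SERVERPROPERTY('ProductVersion') AS ProductVersion, -- build number; 11.0.x for SQL Server 2012
       SERVERPROPERTY('ProductLevel')   AS ProductLevel;   -- RTM or service pack level
```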

SQL Server 2012 Licensing Overview

The licensing models for SQL Server 2012 have been simplified to better align with customer solutions, and they have been optimized for virtualization and cloud deployments. Organizations should be aware of the following changes. With SQL Server 2012, licensing for computing power is core-based, and the Business Intelligence and Standard editions are available under the Server + Client Access License (CAL) model. In addition, organizations can save on cloud-based computing costs by licensing individual database virtual machines. Because each customer environment is unique, this overview cannot describe how the licensing changes affect your particular environment. For more information on the licensing changes and how they affect your organization, please contact your Microsoft representative or partner.

Software Component                      Requirements
Windows PowerShell                      Windows PowerShell 2.0
SQL Server support tools and software   SQL Server 2012 – SQL Server Native Client
                                        SQL Server 2012 – SQL Server Setup Support Files
                                        Minimum: Windows Installer 4.5
Internet Explorer                       Minimum: Windows Internet Explorer 7 or later version
Virtualization                          Windows Server 2008 SP2 running Hyper-V role, or
                                        Windows Server 2008 R2 SP1 running Hyper-V role

Note The server hardware has supported both 32-bit and 64-bit processors for several

years; however, Windows Server 2008 R2 is 64-bit only. Take this into serious consideration

when planning SQL Server 2012 deployments.

Installation, Upgrade, and Migration Strategies

Like its predecessors, SQL Server 2012 is available in both 32-bit and 64-bit editions. Both can be installed with the SQL Server Installation Wizard, through a command prompt, or with Sysprep for automated deployments with minimal administrator intervention. As mentioned earlier in the chapter, SQL Server 2012 can now be installed on Server Core, which is an installation option of Windows Server 2008 R2 SP1 or later. Finally, database administrators can either upgrade an existing installation of SQL Server or conduct a side-by-side migration when installing SQL Server 2012. The following sections elaborate on these strategies.

The In-Place Upgrade

An in-place upgrade is the upgrade of an existing SQL Server installation to SQL Server 2012.

When an in-place upgrade is conducted, the SQL Server 2012 setup program replaces the previous

SQL Server binaries with the new SQL Server 2012 binaries on the existing machine. SQL Server data is

automatically converted from the previous version to SQL Server 2012. This means data does not have

to be copied or migrated. In the example in Figure 1-8, a database administrator is conducting an

in-place upgrade on a SQL Server 2008 instance running on Server 1. When the upgrade is complete,

Server 1 still exists, but the SQL Server 2008 instance and all of its data is upgraded to SQL Server 2012.

Note SQL Server 2005 with SP4, SQL Server 2008 with SP2, and SQL Server 2008 R2 with

SP1 are all supported for an in-place upgrade to SQL Server 2012. Unfortunately, earlier

versions such as SQL Server 2000, SQL Server 7.0, and SQL Server 6.5 cannot be upgraded

to SQL Server 2012.
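Before attempting an in-place upgrade, you can query SERVERPROPERTY to check whether an instance is a supported upgrade source. This sketch declares and assigns variables in separate statements so that it also runs on SQL Server 2005, which does not support initializing a variable in DECLARE. It maps the major version number to the minimum service pack listed in the preceding note. It does not compare service pack levels itself, so check the ProductLevel column manually. The SQL Server 2012 Upgrade Advisor remains the more thorough check.

```sql
DECLARE @version nvarchar(128), @level nvarchar(128);
SET @version = CAST(SERVERPROPERTY('ProductVersion') AS nvarchar(128));
SET @level   = CAST(SERVERPROPERTY('ProductLevel')   AS nvarchar(128));

SELECT @version AS ProductVersion,
       @level   AS ProductLevel,
       CASE
           -- Major version prefixes: 9.00 = 2005, 10.0 = 2008, 10.50 = 2008 R2
           WHEN @version LIKE '9.00.%'  THEN N'SQL Server 2005: SP4 required'
           WHEN @version LIKE '10.0.%'  THEN N'SQL Server 2008: SP2 or later required'
           WHEN @version LIKE '10.50.%' THEN N'SQL Server 2008 R2: SP1 or later required'
           ELSE N'Not a supported in-place upgrade source'
       END AS InPlaceUpgradeRequirement;
```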

Side-by-Side Migration Pros and Cons

The greatest benefit of a side-by-side migration over an in-place upgrade is the opportunity to build out a new database infrastructure on SQL Server 2012 and avoid potential migration issues that can occur with an in-place upgrade. A side-by-side migration also provides more granular control over the upgrade process, because you can migrate databases and components independently of one another. In addition, the legacy instance remains online during the migration process. Because the new infrastructure typically runs on newly procured hardware, the result can also be a more powerful server. Moreover, when two instances are running in parallel, additional testing and verification can be conducted. Performing a rollback is also easy if a problem arises during the migration.

However, there are disadvantages to the side-by-side strategy. Additional hardware might need to be purchased. Applications might also need to be redirected to the new SQL Server 2012 instance, and the strategy might not be practical for very large databases because the migration process requires duplicate storage.

SQL Server 2012 High-Level, Side-by-Side Strategy

The high-level, side-by-side migration strategy for upgrading to SQL Server 2012 consists of the following

steps:

1. Ensure the instance of SQL Server you plan to migrate meets the hardware and software

requirements for SQL Server 2012.

2. Review the deprecated and discontinued features in SQL Server 2012 by referring to “Deprecated Database Engine Features in SQL Server 2012” at http://technet.microsoft.com/en-us/library/ms143729(v=sql.110).aspx.

3. Although a legacy instance will not be upgraded to SQL Server 2012, it is still beneficial to run the SQL Server 2012 Upgrade Advisor to confirm that the data and objects being migrated are supported by SQL Server 2012 and to reduce the risk of problems after migration.

4. Procure the hardware, and install your operating system of choice. Windows Server 2012 is

recommended.

5. Install the SQL Server 2012 prerequisites and desired components.

6. Migrate objects from the legacy SQL Server to the new SQL Server 2012 database platform.

7. Point applications to the new SQL Server 2012 database platform.

8. Decommission legacy servers after the migration is complete.

CHAPTER 2

High-Availability and Disaster-Recovery Enhancements

Microsoft SQL Server 2012 delivers significant enhancements to well-known, critical capabilities such as high availability (HA) and disaster recovery. These enhancements promise to assist organizations in achieving their highest level of confidence to date in their server environments. Server Core support, breakthrough features such as AlwaysOn Availability Groups and active secondaries, and key improvements to features such as failover clustering give organizations a range of options for achieving maximum application availability and data protection for SQL Server instances and databases, both within a datacenter and across datacenters.

This chapter’s goal is to bring readers up to date with the high-availability and disaster-recovery

capabilities that are fully integrated into SQL Server 2012 as a result of Microsoft’s heavy investment

in AlwaysOn.

SQL Server AlwaysOn: A Flexible and Integrated Solution

Every organization’s success and service reputation is built on ensuring that its data is always accessible and protected. In the IT world, this means delivering a product that achieves the highest level of availability and disaster recovery while minimizing data loss and downtime. With previous versions of SQL Server, organizations achieved high availability and disaster recovery by using technologies such as failover clustering, database mirroring, log shipping, and peer-to-peer replication.

Although organizations achieved great success with these solutions, they had to combine these native SQL Server technologies to meet their business requirements for Recovery Point Objective (RPO) and Recovery Time Objective (RTO).

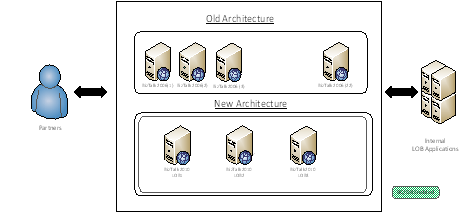

Figure 2-1 illustrates a common high-availability and disaster-recovery strategy used by organizations with previous versions of SQL Server. This strategy includes failover clustering to protect SQL Server instances within each datacenter, combined with asynchronous database mirroring to provide disaster-recovery capabilities for mission-critical databases.


[Diagram: a SQL Server 2008 R2 failover cluster in the primary datacenter and another in the secondary datacenter, connected by asynchronous data movement with database mirroring]

FIGURE 2-1 Achieving high availability and disaster recovery by combining failover clustering with database mirroring in SQL Server 2008 R2

Likewise, organizations that required more than one secondary datacenter, or that did not have shared storage, built a high-availability and disaster-recovery deployment that combined synchronous database mirroring with a witness within the primary datacenter and log shipping to move data to multiple locations. This deployment strategy is illustrated in Figure 2-2.

[Diagram: SQL Server 2008 R2 instances in the primary datacenter use synchronous data movement with database mirroring and a witness; log shipping moves data to SQL Server 2008 R2 instances in Disaster Recovery Datacenter 1 and Disaster Recovery Datacenter 2]

FIGURE 2-2 Achieving high availability and disaster recovery with database mirroring combined with log shipping in SQL Server 2008 R2


Figures 2-1 and 2-2 both show successful solutions for achieving high availability and disaster recovery. However, these solutions had limitations that warranted change. In addition, because organizations are constantly evolving, it was only a matter of time before they voiced their concerns and asked for more options.

One concern for many organizations involved database mirroring. Database mirroring is a great way to protect databases; however, it is a one-to-one mapping, so multiple secondaries are not possible. Faced with this limitation, many organizations fell back on log shipping as a replacement for database mirroring because it supports multiple secondaries. Unfortunately, log shipping has its own limitations: it does not provide zero data loss or automatic failover. Organizations using failover clustering had concerns as well, because their shared-storage devices, such as a storage area network (SAN), could be a single point of failure. Many organizations also felt that, from a cost perspective, their investments were not being used to their full potential; for example, the passive servers in many of these solutions sat idle. Finally, many organizations wanted to offload reporting and maintenance tasks from the primary database servers, which was not easy to achieve.

SQL Server has evolved to answer many of these concerns with an integrated solution called AlwaysOn. AlwaysOn Availability Groups and AlwaysOn Failover Cluster Instances are new features, introduced in SQL Server 2012, that are rich with options and promise customers the highest level of availability and disaster recovery. At a high level, AlwaysOn Availability Groups is used for database protection and offers multidatabase failover, multiple secondaries, active secondaries, and integrated HA management. AlwaysOn Failover Cluster Instances, on the other hand, is tailored to instance-level protection, multisite clustering, and consolidation, while providing flexible failover policies and improved diagnostics.

AlwaysOn Availability Groups

AlwaysOn Availability Groups provides an enterprise-level alternative to database mirroring, and it gives organizations the ability to automatically or manually fail over a group of databases as a single unit, with support for up to four secondaries. The solution can provide zero-data-loss protection when synchronous-commit mode is used, and it is flexible: it can be deployed on local storage or shared storage, and it supports both synchronous and asynchronous data movement. Application failover is very fast, automatic page repair is supported, and the secondary replicas can be leveraged to offload reporting and a number of maintenance tasks, such as backups.

Take a look at Figure 2-3, which illustrates an AlwaysOn Availability Groups deployment strategy

that includes one primary replica and three secondary replicas.

[Diagram: primary replica Replica1 and secondary replicas Replica2, Replica3, and Replica4 spread across a primary datacenter and a secondary datacenter, using synchronous data movement within the primary datacenter and asynchronous data movement to the secondary datacenter; secondary replicas serve reports and backups]

FIGURE 2-3 Achieving high availability and disaster recovery with AlwaysOn Availability Groups

In this figure, synchronous data movement is used to provide high availability within the primary

datacenter and asynchronous data movement is used to provide disaster recovery. Moreover, secondary

replica 3 and replica 4 are employed to offload reports and backups from the primary replica.

It is now time to take a deeper dive into AlwaysOn Availability Groups through a review of the new

concepts and terminology associated with this breakthrough capability.

Understanding Concepts and Terminology

Availability groups are built on top of Windows Server Failover Clustering (WSFC) and support both shared and nonshared storage. Depending on an organization’s RPO and RTO requirements, availability groups can use either an asynchronous-commit availability mode or a synchronous-commit availability mode to move data between primary and secondary replicas. Availability groups include built-in compression and encryption, as well as support for FILESTREAM replication and automatic page repair. Failover between replicas is either automatic or manual.

When deploying AlwaysOn Availability Groups, your first step is to deploy a Windows Server failover cluster. This is done by using the Failover Cluster Manager snap-in in Windows Server 2008 R2. Once the failover cluster is formed, the remainder of the availability group configuration is completed in SQL Server Management Studio. When you use the Availability Groups wizards to configure your availability groups, SQL Server Management Studio automatically creates the appropriate services, applications, and resources in Failover Cluster Manager; hence, deployment is much easier for database administrators who are not familiar with failover clustering.

Now that the fundamentals of AlwaysOn Availability Groups have been laid down, the natural question that follows is how this feature enhances an organization’s operations. Unlike database mirroring, which supports only one secondary, AlwaysOn Availability Groups supports one primary replica and up to four secondary replicas. Availability groups can also contain more than one availability database. Equally appealing is the fact that you can host more than one availability group within an implementation. As a result, it is possible to group databases with application dependencies together within an availability group and have all the availability databases seamlessly fail over as a single cohesive unit, as depicted in Figure 2-4.

FIGURE 2-4 Dedicated availability groups for Finance and HR availability databases

In addition, as shown in Figure 2-4, there is one primary replica and two secondary replicas with

two availability groups. One of these availability groups is called Finance, and it includes all the

Finance databases; the other availability group is called HR, and it includes all the Human Resources

databases. The Finance availability group can fail over independently of the HR availability group,

and unlike database mirroring, all availability databases within an availability group fail over as a

single unit. Moreover, organizations can improve their IT efficiency, increase performance, and reduce

total cost of ownership with better resource utilization of secondary/passive hardware because these

secondary replicas can be leveraged for backups and read-only operations such as reporting and

maintenance. This is covered in the “Active Secondaries” section later in this chapter.

Now that you have been introduced to some of the benefits AlwaysOn Availability Groups offers

for an organization, let’s take the time to get a stronger understanding of the AlwaysOn Availability

Groups concepts and how this new capability operates. The concepts covered include the following:

• Availability replica roles

• Data synchronization modes

• Failover modes

• Connection mode in secondaries

• Availability group listeners

Availability Replica Roles

Each AlwaysOn availability group comprises a set of two or more failover partners, referred to as availability replicas. Each availability replica holds either the primary role or a secondary role. There can be a maximum of four secondaries, and of these four, at most two can be configured to use the synchronous-commit availability mode.
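The replica limits just described lend themselves to a quick sanity check before you run the wizard. This Python sketch models an availability group layout with a hypothetical `Replica` class and returns any violations of the rules stated above (two or more replicas, exactly one primary, at most four secondaries, at most two of them synchronous-commit); it does not connect to SQL Server.

```python
from dataclasses import dataclass
from typing import List

MAX_SECONDARIES = 4        # SQL Server 2012 limit stated above
MAX_SYNC_SECONDARIES = 2   # secondaries allowed to use synchronous-commit mode


@dataclass
class Replica:
    name: str
    role: str          # "PRIMARY" or "SECONDARY"
    synchronous: bool  # True if the replica uses synchronous-commit mode


def validate_replica_roles(replicas: List[Replica]) -> List[str]:
    """Return a list of rule violations; an empty list means the layout is valid."""
    errors = []
    if len(replicas) < 2:
        errors.append("an availability group needs two or more availability replicas")
    bad_roles = [r.name for r in replicas if r.role not in ("PRIMARY", "SECONDARY")]
    if bad_roles:
        errors.append(f"unknown role on: {', '.join(bad_roles)}")
    primaries = [r for r in replicas if r.role == "PRIMARY"]
    if len(primaries) != 1:
        errors.append(f"exactly one primary replica is required, found {len(primaries)}")
    secondaries = [r for r in replicas if r.role == "SECONDARY"]
    if len(secondaries) > MAX_SECONDARIES:
        errors.append(f"{len(secondaries)} secondaries exceeds the maximum of {MAX_SECONDARIES}")
    sync_count = sum(1 for r in secondaries if r.synchronous)
    if sync_count > MAX_SYNC_SECONDARIES:
        errors.append(
            f"{sync_count} synchronous-commit secondaries exceeds the maximum of {MAX_SYNC_SECONDARIES}"
        )
    return errors


if __name__ == "__main__":
    layout = [
        Replica("Replica1", "PRIMARY", True),
        Replica("Replica2", "SECONDARY", True),
        Replica("Replica3", "SECONDARY", False),
        Replica("Replica4", "SECONDARY", False),
    ]
    print(validate_replica_roles(layout) or "layout is valid")
```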

The roles of the availability replicas in AlwaysOn Availability Groups follow the same principle as the legendary Sith “Rule of Two” doctrine in the Star Wars saga. In Star Wars, there can be only two Sith at one time, a master and an apprentice. Similarly, a SQL Server instance in an availability group can hold only the primary role or a secondary role, never both at the same time, because role swapping is controlled by Windows Server Failover Clustering (WSFC).

Each of the SQL Server instances in the availability group is hosted on either a SQL Server failover

cluster instance (FCI) or a stand-alone instance of SQL Server 2012. Each of these instances resides on

a different node of the same WSFC cluster. WSFC is typically used for providing high availability and disaster recovery

for well-known Microsoft products. As such, availability groups use WSFC as the underlying mechanism

to provide internode health detection, failover coordination, primary health detection, and

distributed change notifications for the solution.

Each availability replica hosts a copy of the availability databases in the availability group. Because

there are multiple copies of the databases being hosted on each availability replica, there isn’t a

prerequisite for using shared storage like there was in the past when deploying traditional SQL Server

failover clusters. On the flip side, when using nonshared storage, an organization must realize that

storage requirements increase as the number of replicas it plans on hosting increases.

Data Synchronization Modes

To move data from the primary replica to a secondary replica, each availability replica uses either synchronous-commit availability mode or asynchronous-commit availability mode. Consider the following items when selecting either option:

• When you use synchronous-commit mode, the primary replica waits until the secondary replica has hardened the transaction’s log records to disk before the commit completes, which guarantees transactional consistency between the two replicas. This, however, increases transaction latency. As such, this option might not be appropriate for partners that don’t share a high-speed network or that reside in different geographical locations.

• In asynchronous-commit mode, the primary replica commits transactions without waiting for the secondary replica to write the log to disk. This maximizes performance between the application and the primary replica and is well suited for disaster-recovery solutions.
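To make the latency trade-off concrete, the following Python sketch is a deliberately simplified model, not a description of SQL Server internals: it assumes the local log flush and the ship-and-harden on the secondary proceed in parallel, so a synchronous commit waits for the slower of the two. The millisecond figures in the usage loop are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CommitOutcome:
    latency_ms: float            # time until the application sees the commit
    hardened_on_secondary: bool  # is the log already on the secondary's disk?


def commit(mode: str, local_flush_ms: float, network_rtt_ms: float,
           remote_flush_ms: float) -> CommitOutcome:
    """Toy model of when a commit is acknowledged under each availability mode."""
    if mode == "SYNCHRONOUS_COMMIT":
        # Local flush and ship-and-harden on the secondary overlap;
        # the commit is acknowledged only after both have finished.
        return CommitOutcome(max(local_flush_ms, network_rtt_ms + remote_flush_ms), True)
    if mode == "ASYNCHRONOUS_COMMIT":
        # Only the local flush is awaited; the secondary catches up later,
        # so log not yet hardened there is at risk if the primary is lost.
        return CommitOutcome(local_flush_ms, False)
    raise ValueError(f"unknown availability mode: {mode}")


if __name__ == "__main__":
    for label, rtt in [("same datacenter", 0.5), ("remote site", 80.0)]:
        for mode in ("SYNCHRONOUS_COMMIT", "ASYNCHRONOUS_COMMIT"):
            out = commit(mode, local_flush_ms=2.0, network_rtt_ms=rtt, remote_flush_ms=2.0)
            print(f"{label:15} {mode:20} {out.latency_ms:6.1f} ms  hardened={out.hardened_on_secondary}")
```

Under this model the synchronous penalty is negligible inside one datacenter but is dominated by the round trip once the partner is remote, which is why the text steers synchronous-commit toward partners on a fast local network.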

Availability Groups Failover Modes

When configuring AlwaysOn availability groups, database administrators can choose from two failover modes for swapping roles between the primary and secondary replicas. Administrators who are familiar with database mirroring will find these failover modes very similar to the ones used in database mirroring to obtain high availability and disaster recovery. These are the two AlwaysOn failover modes available when using the New Availability Group Wizard:

• Automatic Failover This replica uses synchronous-commit availability mode, and it supports both automatic failover and manual failover between the replica partners. A maximum of two availability replicas, the primary replica and one secondary replica, can be automatic failover partners.

• Manual Failover This replica uses synchronous-commit or asynchronous-commit availability mode and supports only manual failovers between the replica partners. When the target replica uses asynchronous-commit mode, a manual failover is a forced failover, which can result in data loss.
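The failover-mode rules can be checked the same way. This Python sketch (the `ReplicaConfig` class and helper names are hypothetical) flags automatic failover on an asynchronous-commit replica and more than two automatic failover partners, and labels what a manual failover means for each availability mode.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicaConfig:
    name: str
    availability_mode: str  # "SYNCHRONOUS_COMMIT" or "ASYNCHRONOUS_COMMIT"
    failover_mode: str      # "AUTOMATIC" or "MANUAL"


def validate_failover_modes(replicas: List[ReplicaConfig]) -> List[str]:
    """Return violations of the failover-mode rules described above."""
    errors = []
    automatic = [r for r in replicas if r.failover_mode == "AUTOMATIC"]
    for r in automatic:
        if r.availability_mode != "SYNCHRONOUS_COMMIT":
            errors.append(f"{r.name}: automatic failover requires synchronous-commit mode")
    if len(automatic) > 2:
        errors.append(f"{len(automatic)} automatic failover partners; the maximum is 2")
    return errors


def manual_failover_kind(availability_mode: str) -> str:
    """Describe what a manual failover to a replica in this mode amounts to."""
    if availability_mode == "SYNCHRONOUS_COMMIT":
        return "planned manual failover (no data loss when synchronized)"
    return "forced failover (possible data loss)"


if __name__ == "__main__":
    config = [
        ReplicaConfig("SQL01", "SYNCHRONOUS_COMMIT", "AUTOMATIC"),
        ReplicaConfig("SQL02", "SYNCHRONOUS_COMMIT", "AUTOMATIC"),
        ReplicaConfig("SQL03", "ASYNCHRONOUS_COMMIT", "MANUAL"),
    ]
    print(validate_failover_modes(config) or "failover modes are valid")
    for r in config:
        print(r.name, "->", manual_failover_kind(r.availability_mode))
```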

Connection Mode in Secondaries

As indicated earlier, each of the secondaries can be configured to support read-only access for reporting or other maintenance tasks, such as backups. During the final configuration stage of AlwaysOn availability groups, database administrators decide on the connection mode for the secondary replicas. There are three connection modes available:

• Disallow connections In the secondary role, this availability replica does not allow any connections.

• Allow only read-intent connections In the secondary role, this availability replica allows only read-intent connections.

• Allow all connections In the secondary role, this availability replica allows all connections for read access, including connections running with older clients.

Availability Group Listeners

The availability group listener provides a way of connecting to databases within an availability group

via a virtual network name that is bound to the primary replica. Applications can specify the network

name affiliated with the availability group listener in connection strings. After the availability group

fails over from the primary replica to a secondary replica, the network name directs connections to

the new primary replica. The availability group listener concept is similar to a Virtual SQL Server Name

when using failover clustering; however, with an availability group listener, there is a virtual network

name for each availability group, whereas with SQL Server failover clustering, there is one virtual

network name for the instance.

You can specify your availability group listener preferences when using the New Availability Group Wizard in SQL Server Management Studio, or you can manually create or modify an availability group listener after the availability group is created. Alternatively, you can use Transact-SQL to create or modify the listener. Notice in Figure 2-5 that each availability group listener requires a DNS name, an IP address, and a port, such as 1433. Once the availability group listener is created, a server name and an IP address cluster resource are automatically created within Failover Cluster Manager. This is a testimony to the availability group’s flexibility and tight integration with SQL Server, because the majority of the configuration is done within SQL Server.

FIGURE 2-5 Specifying availability group listener properties
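Because the listener can also be created with Transact-SQL, here is a Python sketch that only builds the ALTER AVAILABILITY GROUP ... ADD LISTENER statement from the three properties shown in Figure 2-5 (DNS name, IP address with subnet mask, and port). The group name, listener name, and addresses in the example are hypothetical, and you should verify the generated statement against the documentation for your SQL Server version before running it.

```python
import ipaddress


def quote_ident(name: str) -> str:
    """Bracket-quote a T-SQL identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_str(value: str) -> str:
    """Quote a T-SQL Unicode string literal, escaping single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def add_listener_tsql(group: str, dns_name: str, ip: str, mask: str, port: int = 1433) -> str:
    """Build an ADD LISTENER statement for one static IPv4 address."""
    # Validates the address/mask pair; raises ValueError if either is malformed.
    ipaddress.IPv4Network(f"{ip}/{mask}", strict=False)
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return (
        f"ALTER AVAILABILITY GROUP {quote_ident(group)}\n"
        f"ADD LISTENER {quote_str(dns_name)} (\n"
        f"    WITH IP (({quote_str(ip)}, {quote_str(mask)})),\n"
        f"    PORT = {port});"
    )


if __name__ == "__main__":
    # Hypothetical values for the Finance availability group.
    print(add_listener_tsql("Finance", "FinanceListener", "192.168.115.20", "255.255.255.0"))
```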

Be aware that there is a one-to-one mapping between availability group listeners and availability groups. This means you can create one availability group listener for each availability group. However, if more than one availability group exists within a replica, you can have more than one availability group listener. For example, there are two availability groups shown in Figure 2-6: one is for the Finance availability databases, and the other is for the Accounting availability databases. Each availability group has its own availability group listener that clients and applications connect to.

FIGURE 2-6 Illustrating two availability group listeners within a replica

Configuring Availability Groups

When creating a new availability group, a database administrator needs to specify an availability group name, such as AvailabilityGroupFinance, and then select one or more databases to be part of the availability group. The next step is to specify one or more instances of SQL Server to host secondary availability replicas, and then to specify your availability group listener preference. The final step is selecting the data-synchronization preference and connection mode for the secondary replicas. These configurations are performed with the New Availability Group Wizard or with Transact-SQL or PowerShell scripts.

Prerequisites

To deploy AlwaysOn Availability Groups, the following prerequisites must be met:

• All computers running SQL Server, including the servers that will reside in the disaster-recovery site, must reside in the same Windows-based domain.

• All SQL Server computers must participate in a single Windows Server failover cluster, even if the servers reside in multiple sites.

• AlwaysOn Availability Groups must be enabled on each SQL Server instance.

• All the databases must use the full recovery model.

• A full backup must be taken of each database before deployment.

• The servers cannot host the Active Directory Domain Services role.
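A pre-deployment checklist can be automated along these lines. In this Python sketch, the `ServerInfo` and `DatabaseInfo` classes are hypothetical stand-ins for facts you would gather from each server; the function simply reports which of the prerequisites above are not met.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DatabaseInfo:
    name: str
    recovery_model: str  # e.g. "FULL", "SIMPLE", "BULK_LOGGED"
    has_full_backup: bool


@dataclass
class ServerInfo:
    name: str
    domain: str
    wsfc_cluster: Optional[str]  # None if the node is not in a failover cluster
    hadr_enabled: bool           # AlwaysOn Availability Groups enabled on the instance
    is_domain_controller: bool   # hosts the Active Directory Domain Services role
    databases: List[DatabaseInfo] = field(default_factory=list)


def check_prerequisites(servers: List[ServerInfo]) -> List[str]:
    """Return human-readable prerequisite failures; empty means ready to deploy."""
    problems = []
    if len({s.domain for s in servers}) > 1:
        problems.append("servers are not all in the same domain")
    clusters = {s.wsfc_cluster for s in servers}
    if None in clusters or len(clusters) != 1:
        problems.append("servers are not all members of one WSFC cluster")
    for s in servers:
        if not s.hadr_enabled:
            problems.append(f"{s.name}: AlwaysOn Availability Groups is not enabled")
        if s.is_domain_controller:
            problems.append(f"{s.name}: hosts the Active Directory Domain Services role")
        for db in s.databases:
            if db.recovery_model != "FULL":
                problems.append(f"{s.name}/{db.name}: recovery model is {db.recovery_model}, not FULL")
            if not db.has_full_backup:
                problems.append(f"{s.name}/{db.name}: no full backup taken")
    return problems


if __name__ == "__main__":
    servers = [
        ServerInfo("SQL01", "CONTOSO", "CLUSTER1", True, False,
                   [DatabaseInfo("FinanceDB", "FULL", True)]),
        ServerInfo("SQL02", "CONTOSO", "CLUSTER1", True, False),
    ]
    print(check_prerequisites(servers) or "all prerequisites met")
```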

Deployment Examples

Figure 2-7 shows the Specify Replicas page you see when using the New Availability Group Wizard. In this example, there are three SQL Server instances in the availability group called Finance: SQL01\Instance01, SQL02\Instance01, and SQL03\Instance01. SQL01\Instance01 is configured as the primary replica, whereas SQL02\Instance01 and SQL03\Instance01 are configured as secondaries. SQL01\Instance01 and SQL02\Instance01 support automatic failover with synchronous data movement, whereas SQL03\Instance01 uses asynchronous-commit availability mode and supports only a forced failover. Finally, SQL01\Instance01 does not allow connections when it is in the secondary role, whereas SQL02\Instance01 and SQL03\Instance01 allow read-intent connections in the secondary role. In addition, for this example, SQL01\Instance01 and SQL02\Instance01 reside in a primary datacenter for high availability within a site, and SQL03\Instance01 resides in the disaster-recovery datacenter and will be brought online manually if the primary datacenter becomes unavailable.

FIGURE 2-7 Specifying the SQL Server instances in the availability group
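The Figure 2-7 configuration can also be expressed in Transact-SQL. The following Python sketch generates a CREATE AVAILABILITY GROUP statement for it; the database name FinanceDB and the endpoint URLs are hypothetical placeholders, the sketch assumes identifiers contain no characters that need escaping, and it does not cover creating the database mirroring endpoints or joining the secondaries.

```python
from dataclasses import dataclass
from typing import List

AVAILABILITY_MODES = {"SYNCHRONOUS_COMMIT", "ASYNCHRONOUS_COMMIT"}
FAILOVER_MODES = {"AUTOMATIC", "MANUAL"}
ALLOW_CONNECTIONS = {"NO", "READ_ONLY", "ALL"}  # READ_ONLY = read-intent only


@dataclass
class ReplicaSpec:
    server_instance: str
    endpoint_url: str
    availability_mode: str
    failover_mode: str
    allow_connections: str  # behavior while in the secondary role


def replica_clause(r: ReplicaSpec) -> str:
    """Render one REPLICA ON entry after validating its option values."""
    for value, allowed in ((r.availability_mode, AVAILABILITY_MODES),
                           (r.failover_mode, FAILOVER_MODES),
                           (r.allow_connections, ALLOW_CONNECTIONS)):
        if value not in allowed:
            raise ValueError(f"{r.server_instance}: unsupported option {value!r}")
    return (
        f"    N'{r.server_instance}' WITH (\n"
        f"        ENDPOINT_URL = N'{r.endpoint_url}',\n"
        f"        AVAILABILITY_MODE = {r.availability_mode},\n"
        f"        FAILOVER_MODE = {r.failover_mode},\n"
        f"        SECONDARY_ROLE (ALLOW_CONNECTIONS = {r.allow_connections}))"
    )


def create_ag_tsql(group: str, databases: List[str], replicas: List[ReplicaSpec]) -> str:
    """Build a CREATE AVAILABILITY GROUP statement (assumes safe identifiers)."""
    db_list = ", ".join(f"[{d}]" for d in databases)
    clauses = ",\n".join(replica_clause(r) for r in replicas)
    return f"CREATE AVAILABILITY GROUP [{group}]\nFOR DATABASE {db_list}\nREPLICA ON\n{clauses};"


if __name__ == "__main__":
    replicas = [
        ReplicaSpec(r"SQL01\Instance01", "TCP://sql01.contoso.com:5022",
                    "SYNCHRONOUS_COMMIT", "AUTOMATIC", "NO"),
        ReplicaSpec(r"SQL02\Instance01", "TCP://sql02.contoso.com:5022",
                    "SYNCHRONOUS_COMMIT", "AUTOMATIC", "READ_ONLY"),
        ReplicaSpec(r"SQL03\Instance01", "TCP://sql03.contoso.com:5022",
                    "ASYNCHRONOUS_COMMIT", "MANUAL", "READ_ONLY"),
    ]
    print(create_ag_tsql("Finance", ["FinanceDB"], replicas))
```

Validating the option values in Python keeps a typo from surfacing only when the statement reaches the server.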

One thing becomes vividly clear from Figure 2-7 and the preceding example: many different deployment configurations are available to satisfy a wide range of high-availability and disaster-recovery requirements. See Figure 2-8 for the following additional deployment alternatives:

• Nonshared storage, local, regional, and geo target

• Multisite cluster with another cluster as the disaster recovery (DR) target

• Three-node cluster with similar DR target

• Secondary targets for backup, reporting, and DR

[Diagram panels: Nonshared Storage, Local, Regional, and Geo Target; Multisite Cluster with Another Cluster as Disaster Recovery Target; Three-Node Cluster with Similar Disaster Recovery Target; Secondary Targets for Backup, Reporting, and Disaster Recovery]

FIGURE 2-8 Additional AlwaysOn deployment alternatives

Monitoring Availability Groups with the Dashboard

Administrators can leverage a new and remarkably intuitive dashboard in SQL Server 2012 to monitor availability groups. The dashboard, shown in Figure 2-9, reports the health and status of each instance and availability database in the availability group. Moreover, the dashboard displays the replica role of each instance and its synchronization status. If there is an issue, or if more information on a specific event is required, a database administrator can click the availability group state, server instance name, or health status hyperlinks for additional information. The dashboard is launched by right-clicking the Availability Groups folder in Object Explorer in SQL Server Management Studio and selecting Show Dashboard.

FIGURE 2-9 Monitoring availability groups with the new Availability Group dashboard

Active Secondaries

As indicated earlier, many organizations told the SQL Server team that they needed to improve IT efficiency by optimizing their existing hardware investments. Specifically, organizations hoped their passive production servers could be used in some other capacity instead of sitting idle. These same organizations also wanted reporting and maintenance tasks offloaded from production servers, because these tasks negatively affected production workloads. With SQL Server 2012, organizations can use the AlwaysOn Availability Groups capability to configure secondary replicas, also referred to as active secondaries, to provide read-only access to databases affiliated with an availability group.

All read-only operations on the secondary replicas use row versioning and are automatically mapped to the snapshot isolation level, which eliminates reader/writer contention.

In addition, the data in the secondary replicas is near real time. In many circumstances, data latency between the primary and secondary databases should be within seconds. Note that the latency of log synchronization affects data freshness.

For organizations, active secondaries translate into better performance on the primary replica and greater overall IT efficiency and hardware utilization.
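One way to reason about the data freshness described above is to estimate how long the queued log will take to reach a secondary and be redone there. The following Python sketch assumes you have read the log_send_queue_size, log_send_rate, redo_queue_size, and redo_rate values (KB and KB/sec) from the sys.dm_hadr_database_replica_states dynamic management view; the arithmetic is a rough estimate, not an exact measure of lag, and the sample readings are hypothetical.

```python
def drain_seconds(queue_kb: float, rate_kb_per_sec: float) -> float:
    """Time to drain a queue at a steady rate; infinite if it is not draining."""
    if queue_kb <= 0:
        return 0.0
    if rate_kb_per_sec <= 0:
        return float("inf")
    return queue_kb / rate_kb_per_sec


def estimated_lag_seconds(log_send_queue_kb: float, log_send_rate_kb_s: float,
                          redo_queue_kb: float, redo_rate_kb_s: float) -> float:
    """Rough estimate of how far a readable secondary trails the primary.

    Log still waiting to be sent must first reach the secondary, and log
    already received must still be redone before readers can see it.
    """
    return (drain_seconds(log_send_queue_kb, log_send_rate_kb_s)
            + drain_seconds(redo_queue_kb, redo_rate_kb_s))


if __name__ == "__main__":
    # Hypothetical readings: 512 KB unsent at 1024 KB/s, 2048 KB not yet redone at 1024 KB/s.
    print(f"{estimated_lag_seconds(512, 1024, 2048, 1024):.1f} seconds")  # 2.5 seconds
```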

Read-Only Access to Secondary Replicas

Recall that when configuring the connection mode for secondary replicas, you can choose Disallow Connections, Allow Only Read-Intent Connections, or Allow All Connections. The Allow Only Read-Intent Connections and Allow All Connections options both provide read-only access to secondary replicas. As its name implies, the Disallow Connections option does not allow read-only access.

Now let’s look at the major differences between Allow Only Read-Intent Connections and Allow All Connections. The Allow Only Read-Intent Connections option allows connections to the databases in the secondary replica only when the Application Intent connection property is set to ReadOnly in the client, such as SQL Server Native Client. With the Allow All Connections setting, all client connections are allowed regardless of the Application Intent property. What is the Application Intent property in the connection string? It declares the application workload type when connecting to a server. The possible values are ReadOnly and ReadWrite. In either mode, commands that try to create or modify data on the secondary replica will fail.
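The interaction between the secondary's connection mode and the client's Application Intent property can be summarized as a small decision function. In this Python sketch, the mode names mirror the Transact-SQL ALLOW_CONNECTIONS values (NO, READ_ONLY, ALL); the server and database names in the connection-string helper are hypothetical, and the exact keyword spelling accepted can vary by client library.

```python
def normalize_intent(application_intent: str) -> str:
    """Map 'ReadOnly', 'Read-only', 'READONLY', etc. to 'readonly' or 'readwrite'."""
    intent = application_intent.replace(" ", "").replace("-", "").lower()
    if intent not in ("readonly", "readwrite"):
        raise ValueError(f"unknown application intent: {application_intent}")
    return intent


def secondary_accepts(allow_connections: str, application_intent: str = "ReadWrite") -> bool:
    """Would a secondary replica with this connection mode accept the connection?

    ReadWrite is used as the default because that is what a client declares
    when it does not specify Application Intent.
    """
    intent = normalize_intent(application_intent)
    if allow_connections == "NO":         # Disallow connections
        return False
    if allow_connections == "READ_ONLY":  # Allow only read-intent connections
        return intent == "readonly"
    if allow_connections == "ALL":        # Allow all connections (writes still fail)
        return True
    raise ValueError(f"unknown connection mode: {allow_connections}")


def read_intent_connection_string(server: str, database: str) -> str:
    """Build an ADO.NET-style connection string that declares read intent."""
    return f"Server={server};Database={database};Integrated Security=SSPI;ApplicationIntent=ReadOnly"


if __name__ == "__main__":
    for mode in ("NO", "READ_ONLY", "ALL"):
        for intent in ("ReadOnly", "ReadWrite"):
            print(f"{mode:9} {intent:9} -> {secondary_accepts(mode, intent)}")
    print(read_intent_connection_string(r"SQL02\Instance01", "FinanceDB"))
```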

Backups on Secondary

Backups of availability databases participating in availability groups can be conducted on any of the replicas. Backups are still supported on the primary replica, but log backups, as well as copy-only full backups, can also be conducted on any of the secondaries. This is independent of the availability mode being used: synchronous-commit or asynchronous-commit. Log backups completed on all replicas form a single log chain, as shown in Figure 2-10.

[Diagram: on the primary replica, backups are supported if desired; Step 1: log backup on Secondary Replica 1 (LSN 21-40); Step 2: log backup on Secondary Replica 2 (LSN 41-60); Step 3: log backup on Secondary Replica 3 (LSN 61-80)]

FIGURE 2-10 Forming a single log chain by backing up the transaction logs on multiple secondary replicas

As a result, the transaction log backups do not all have to be performed on the same replica. This does not mean, however, that the location of your backups deserves no thought. It is recommended that you store all backups in a central location, because all transaction log backups are required to perform a restore in the event of a disaster; if a server that holds some of the backups becomes unavailable, you might not be able to complete the restore. In the event of a failure, use the new Database Recovery Advisor wizard; it provides many benefits when conducting restores. For example, if you are performing backups on different secondaries, the wizard generates a visual, chronological timeline by stitching together all of the log backups based on their Log Sequence Numbers (LSNs).
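The stitching idea can be illustrated with a small Python sketch: sort the log backups by starting LSN and report any breaks in the chain. It uses the simplified, inclusive LSN ranges drawn in Figure 2-10; real backup history records LSNs differently, so treat this as an illustration of the concept, not of the wizard's implementation. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LogBackup:
    replica: str    # replica on which the log backup was taken
    first_lsn: int  # first LSN covered (inclusive, as in Figure 2-10)
    last_lsn: int   # last LSN covered (inclusive)


def stitch_log_chain(backups: List[LogBackup]) -> Tuple[List[LogBackup], List[Tuple[int, int]]]:
    """Order log backups by LSN and return (ordered_backups, breaks).

    Each break is (last LSN before it, first LSN after it). An empty list means
    the backups form one unbroken chain, regardless of which replica took them.
    """
    ordered = sorted(backups, key=lambda b: b.first_lsn)
    breaks = []
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.first_lsn != prev.last_lsn + 1:
            breaks.append((prev.last_lsn, cur.first_lsn))
    return ordered, breaks


if __name__ == "__main__":
    history = [
        LogBackup("Secondary Replica 2", 41, 60),
        LogBackup("Secondary Replica 1", 21, 40),
        LogBackup("Secondary Replica 3", 61, 80),
    ]
    ordered, breaks = stitch_log_chain(history)
    for b in ordered:
        print(f"LSN {b.first_lsn}-{b.last_lsn} from {b.replica}")
    print("chain is complete" if not breaks else f"breaks: {breaks}")
```

If the backup taken on Secondary Replica 2 were lost, the function would report a break between LSN 40 and 61, which is exactly why a central backup location matters.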

AlwaysOn Failover Cluster Instances

You’ve seen the results of the development efforts behind the new AlwaysOn Availability Groups capability for high availability and disaster recovery, and the creation of active secondaries. Now you’ll explore the significant enhancements to traditional capabilities, such as SQL Server failover clustering, that leverage shared storage. Specifically, this section discusses the following improvements, which will appeal to database administrators looking to gain high availability for their SQL Server instances:

• Multisubnet Clustering This feature provides a disaster-recovery solution in addition to high availability, through new support for multisubnet failover clustering.

• Support for TempDB on Local Disk Another storage-level enhancement to failover clustering concerns TempDB. TempDB no longer has to reside on shared storage as it did in previous versions of SQL Server; it is now supported on local disks, which yields many practical benefits for organizations. For example, you can now offload TempDB I/O from shared-storage devices such as a SAN and use fast local solid-state drive (SSD) storage within the server nodes to optimize TempDB workloads, which are typically random I/O.

• Flexible Failover Policy SQL Server 2012 introduces improved failure detection for the SQL Server failover cluster instance by adding failure condition-level properties that allow you to configure a more flexible failover policy.

Note AlwaysOn failover cluster instances can be combined with availability groups to offer

maximum SQL Server instance and database protection.

With the release of Windows Server 2008, new functionality enabled cluster nodes to be connected over different subnets without the need for a stretch virtual local area network (VLAN) across networks. The nodes could reside on different subnets within a datacenter or in another geographical location, such as a disaster-recovery site. This concept is commonly referred to as multisite clustering, multisubnet clustering, or stretch clustering. Unfortunately, previous versions of SQL Server could not take advantage of this Windows failover clustering feature. Organizations that wanted to create either a multisite or multisubnet SQL Server failover cluster still had to create a stretch VLAN to expose a single IP address for failover across sites. This was a complex and challenging task for many organizations. This is no longer the case, because SQL Server 2012 supports multisubnet and multisite clustering out of the box; therefore, stretch VLAN technology is no longer needed.

Figure 2-11 illustrates an example of a SQL Server multisubnet failover cluster between two subnets spanning two sites. Notice how each node affiliated with the multisubnet failover cluster resides on a different subnet. Node 1 is located in Site 1 and resides on the 192.168.115.0/24 subnet, whereas Node 2 is located in Site 2 and resides on the 192.168.116.0/24 subnet. The DNS IP address associated with the virtual network name of the SQL Server cluster is automatically updated when a failover from one subnet to another subnet occurs.

[Diagram: SQL Server failover cluster instance spanning Site 1 (Node 1, SQL Server 2012, 192.168.115.0/24 subnet, IP 192.168.115.5) and Site 2 (Node 2, SQL Server 2012, 192.168.116.0/24 subnet, IP 192.168.116.5), with failover between the nodes and data replication between storage systems]

FIGURE 2-11 A multisubnet failover cluster instance example

For clients and applications to connect to the SQL Server failover cluster, two IP addresses must be registered to the SQL Server failover cluster resource name in WSFC. For example, imagine your server name is SQLFCI01 and the IP addresses are 192.168.115.5 and 192.168.116.5. WSFC automatically controls the failover and brings the appropriate IP address online depending on the node that currently owns the SQL Server resource. If Node 1 is affiliated with the 192.168.115.0/24 subnet and owns the SQL Server failover cluster, the IP address resource 192.168.115.5 is brought online, as shown in Figure 2-12. Similarly, if a failover occurs and Node 2 owns the SQL Server resource, the IP address resource 192.168.115.5 is taken offline and the IP address resource 192.168.116.5 is brought online.
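The following minimal Python sketch models the ownership rule described above. It assumes the two addresses from the SQLFCI01 example and the node subnets from Figure 2-11. It does not show how WSFC is implemented. It only illustrates that the IP address resource on the owning node's subnet is brought online and the other one is taken offline. The names `CLUSTER_IPS`, `NODE_SUBNETS`, `online_ip`, and `ip_states` are hypothetical.

```python
import ipaddress

# Hypothetical model: the two IP address resources registered for SQLFCI01.
CLUSTER_IPS = [
    ipaddress.ip_address("192.168.115.5"),
    ipaddress.ip_address("192.168.116.5"),
]

# Subnet that each node lives on, as in Figure 2-11.
NODE_SUBNETS = {
    "Node1": ipaddress.ip_network("192.168.115.0/24"),
    "Node2": ipaddress.ip_network("192.168.116.0/24"),
}


def online_ip(owner: str) -> ipaddress.IPv4Address:
    """Return the one cluster IP that belongs to the owning node's subnet."""
    subnet = NODE_SUBNETS[owner]
    matches = [ip for ip in CLUSTER_IPS if ip in subnet]
    if len(matches) != 1:
        raise ValueError(f"expected exactly one cluster IP on {subnet}, found {matches}")
    return matches[0]


def ip_states(owner: str) -> dict:
    """Map each cluster IP to 'online' or 'offline' for the given owner node."""
    active = online_ip(owner)
    return {str(ip): ("online" if ip == active else "offline") for ip in CLUSTER_IPS}


if __name__ == "__main__":
    for owner in ("Node1", "Node2"):  # before and after a failover
        print(owner, ip_states(owner))
```

Running the script prints the address states before and after a failover from Node 1 to Node 2.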

FIGURE 2-12 Multiple IP addresses affiliated with a multisubnet failover cluster instance

Because multiple IP addresses are affiliated with the SQL Server failover cluster instance virtual name, the online address changes automatically when there is a failover. In addition, Windows failover clustering issues a DNS update immediately after the network name resource comes online.

The IP address change in DNS might not take effect on clients right away because of DNS caching. Therefore, it is recommended that you minimize client downtime by setting the HostRecordTTL property of the cluster network name resource, which determines the TTL of its DNS host record, to 60 seconds. Consult with your DNS administrator before making any DNS changes, because a shorter TTL causes clients to query DNS more often, which increases the load on the DNS servers.
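The TTL recommendation comes down to simple cache arithmetic. A client that cached the old record just before a failover can keep using it for up to one TTL. The minimal Python sketch below simulates this. It assumes a toy client cache, a DNS update that takes effect instantly at failover, and a client that retries once per second. The class and function names are hypothetical. For comparison, the Windows default for HostRecordTTL is 1200 seconds (20 minutes).

```python
from typing import Dict, Tuple


class ClientDnsCache:
    """Toy client-side resolver cache that honors each record's TTL (seconds)."""

    def __init__(self) -> None:
        # name -> (address, time at which the cached entry expires)
        self._entries: Dict[str, Tuple[str, int]] = {}

    def resolve(self, name: str, now: int, dns_server: Dict[str, str], ttl: int) -> str:
        cached = self._entries.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # served from cache; may be stale after a failover
        address = dns_server[name]  # cache miss or expired entry: query DNS again
        self._entries[name] = (address, now + ttl)
        return address


def seconds_until_new_address(ttl: int, failover_at: int) -> int:
    """Client caches SQLFCI01 at t=0, then retries once per second from the
    failover onward; return how long it takes to see the new address."""
    dns = {"SQLFCI01": "192.168.115.5"}
    cache = ClientDnsCache()
    cache.resolve("SQLFCI01", now=0, dns_server=dns, ttl=ttl)
    dns["SQLFCI01"] = "192.168.116.5"  # DNS update, modeled as instantaneous
    t = failover_at
    while cache.resolve("SQLFCI01", now=t, dns_server=dns, ttl=ttl) != "192.168.116.5":
        t += 1
    return t - failover_at


if __name__ == "__main__":
    for ttl in (1200, 60):
        print(f"TTL {ttl:>4} s: new address visible after "
              f"{seconds_until_new_address(ttl, failover_at=1)} s")
```

In this model, the worst-case wait shrinks roughly in proportion to the TTL. Real clients, and any intermediate DNS servers, add their own caching behavior on top of this.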

Support for Deploying SQL Server 2012 on Windows Server Core

Windows Server Core was originally introduced with Windows Server 2008 and saw significant enhancements with the release of Windows Server 2008 R2. For those who are unfamiliar with Server Core, it is an installation option for the Windows Server 2008 and Windows Server 2008 R2 operating systems. Because Server Core is a minimal deployment of Windows, it is much more secure: its attack surface is greatly reduced. Server Core does not include a traditional Windows graphical interface and, therefore, is managed via a command prompt or by remote administration tools.

Unfortunately, previous versions of SQL Server did not support Server Core, but that has all changed. For the first time, Microsoft SQL Server 2012 supports Server Core installations for organizations running Server Core based on Windows Server 2008 R2 with Service Pack 1 or later.

Why is Server Core so important to SQL Server, and how does it positively affect availability? When you are running SQL Server 2012 on Server Core, operating-system patching is drastically reduced, by up to 60 percent. This translates to higher availability and a reduction in planned downtime for any organization’s mission-critical databases and workloads. In addition, the attack surface is greatly reduced and the overall security of the database platform is strengthened, which again translates to maximum availability and data protection.

When first introduced, Server Core required the use and knowledge of command-line syntax to manage it. Most IT professionals at that time were accustomed to using a graphical user interface (GUI) to manage and configure Windows, so they had a difficult time embracing Server Core. This affected its popularity and, ultimately, its implementation. To ease these challenges, Microsoft created SCONFIG, an out-of-the-box utility introduced with Windows Server 2008 R2 that dramatically simplifies server configuration. To navigate through the SCONFIG options, you only need to type one or more numbers to configure server properties, as displayed in Figure 2-13.

FIGURE 2-13 SCONFIG utility for configuring server properties in Server Core

The following sections describe the SQL Server 2012 prerequisites for Server Core, the SQL Server features supported on Server Core, and the installation alternatives.

SQL Server 2012 Prerequisites for Server Core

Organizations installing SQL Server 2012 on Windows Server 2008 R2 Server Core must meet the following operating system, feature, and component prerequisites.

The operating system requirements are as follows:

■ Windows Server 2008 R2 SP1 64-bit x64 Datacenter Server Core
■ Windows Server 2008 R2 SP1 64-bit x64 Enterprise Server Core
■ Windows Server 2008 R2 SP1 64-bit x64 Standard Server Core
■ Windows Server 2008 R2 SP1 64-bit x64 Web Server Core

Here is the list of features and components:

■ .NET Framework 2.0 SP2
■ .NET Framework 3.5 SP1 Full Profile
■ .NET Framework 4 Server Core Profile
■ Windows Installer 4.5
■ Windows PowerShell 2.0
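The two lists above work as a pre-installation checklist. The minimal Python sketch below encodes them and reports what is missing. It is hypothetical: the strings are simply the labels from the lists above, not names reported by any Windows tool, and collecting the server's actual inventory is outside the sketch.

```python
from typing import Iterable, List

# Labels copied from the prerequisite lists above (hypothetical inventory format).
SUPPORTED_OS = {
    "Windows Server 2008 R2 SP1 x64 Datacenter Server Core",
    "Windows Server 2008 R2 SP1 x64 Enterprise Server Core",
    "Windows Server 2008 R2 SP1 x64 Standard Server Core",
    "Windows Server 2008 R2 SP1 x64 Web Server Core",
}

REQUIRED_COMPONENTS = [
    ".NET Framework 2.0 SP2",
    ".NET Framework 3.5 SP1 Full Profile",
    ".NET Framework 4 Server Core Profile",
    "Windows Installer 4.5",
    "Windows PowerShell 2.0",
]


def prerequisite_problems(os_name: str, installed: Iterable[str]) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    present = set(installed)
    problems = []
    if os_name not in SUPPORTED_OS:
        problems.append(f"unsupported operating system: {os_name}")
    for component in REQUIRED_COMPONENTS:
        if component not in present:
            problems.append(f"missing component: {component}")
    return problems


if __name__ == "__main__":
    report = prerequisite_problems(
        "Windows Server 2008 R2 SP1 x64 Standard Server Core",
        [".NET Framework 2.0 SP2", "Windows PowerShell 2.0"],
    )
    print("\n".join(report) or "all prerequisites met")
```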

Once you have all the prerequisites, it is important to become familiar with the SQL Server components supported on Server Core.

SQL Server Features Supported on Server Core

Numerous SQL Server features are fully supported on Server Core. They include Database Engine Services, SQL Server Replication, Full-Text Search, Analysis Services, Client Tools Connectivity, and Integration Services. However, Server Core does not support many other features, including Reporting Services, Business Intelligence Development Studio, Client Tools Backward Compatibility, Client Tools SDK, SQL Server Books Online, Distributed Replay Controller, SQL Client Connectivity SDK, Master Data Services, and Data Quality Services. Some features, such as Management Tools – Basic, Management Tools – Complete, Distributed Replay Client, and Microsoft Sync Framework, are supported only remotely. These features can be installed on Windows installations that are not Server Core and then used to connect remotely to a SQL Server instance running on Server Core. For a full list of supported and unsupported features, review the information at this link: http://msdn.microsoft.com/en-us/library/hh231669(SQL.110).aspx.

Note To leverage Server Core, you need to plan your SQL Server installation ahead of time. Give yourself the opportunity to fully understand which SQL Server features are required to support your mission-critical workloads.
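To help with that planning, the minimal Python sketch below sorts the features a workload needs into the three groups named above. Anything it does not recognize goes into a fourth bucket that should be checked against the MSDN page. The feature sets mirror only the paragraph above, not the complete official list. The function and bucket names are hypothetical, and the code uses ASCII hyphens in "Management Tools - Basic" and "Management Tools - Complete".

```python
from typing import Dict, Iterable, List

# Classification taken from the paragraph above (SQL Server 2012 on Server Core).
SUPPORTED_LOCALLY = {
    "Database Engine Services", "SQL Server Replication", "Full-Text Search",
    "Analysis Services", "Client Tools Connectivity", "Integration Services",
}
REMOTE_ONLY = {
    "Management Tools - Basic", "Management Tools - Complete",
    "Distributed Replay Client", "Microsoft Sync Framework",
}
UNSUPPORTED = {
    "Reporting Services", "Business Intelligence Development Studio",
    "Client Tools Backward Compatibility", "Client Tools SDK",
    "SQL Server Books Online", "Distributed Replay Controller",
    "SQL Client Connectivity SDK", "Master Data Services", "Data Quality Services",
}


def plan_server_core(required: Iterable[str]) -> Dict[str, List[str]]:
    """Sort the features a workload needs by where (or whether) they can run."""
    plan: Dict[str, List[str]] = {
        "install_on_server_core": [],
        "install_on_remote_machine": [],
        "not_supported": [],
        "unknown_check_docs": [],
    }
    for feature in required:
        if feature in SUPPORTED_LOCALLY:
            plan["install_on_server_core"].append(feature)
        elif feature in REMOTE_ONLY:
            plan["install_on_remote_machine"].append(feature)
        elif feature in UNSUPPORTED:
            plan["not_supported"].append(feature)
        else:
            plan["unknown_check_docs"].append(feature)
    return plan


if __name__ == "__main__":
    for bucket, features in plan_server_core(
        ["Database Engine Services", "Management Tools - Basic", "Reporting Services"]
    ).items():
        print(f"{bucket}: {features}")
```

A non-empty `not_supported` bucket indicates that the workload, or that part of it, needs a full Windows installation rather than Server Core.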

SQL Server on Server Core Installation Alternatives

The SQL Server Installation Wizard is not supported when installing SQL Server 2012 on Server Core. As a result, you need to automate the installation process by using a command-line installation, using a configuration file, or leveraging the DefaultSetup.ini methodology. Details and examples for each of these methods can be found in Books Online: http://technet.microsoft.com/en-us/library/ms144259(SQL.110).aspx.

Note When installing SQL Server 2012 on Server Core, ensure that you use Full Quiet mode by using the /Q parameter or Quiet Simple mode by using the /QS parameter.

Additional High-Availability and Disaster-Recovery Enhancements

This section summarizes some of the additional high-availability and disaster-recovery enhancements found in SQL Server 2012.

Support for Server Message Block

A common trend for organizations in recent years has been the movement toward consolidating databases and applications onto fewer servers; specifically, hosting many instances of SQL Server on a failover cluster. When using failover clustering for consolidation, previous versions of SQL Server required at least one drive letter for each SQL Server failover cluster instance. Because only 23 drive letters are available, before taking any additional reservations into account, the maximum number of SQL Server instances supported on a single failover cluster was 23. Twenty-three instances sounds like an ample number; however, the drive-letter limitation negatively affects organizations running powerful servers that have the compute and memory resources to host more than 23 instances on a single server. Going forward, SQL Server 2012 and failover clustering introduce support for storing databases on Server Message Block (SMB) file shares.

Note You might be thinking you can use mount points to alleviate the drive-letter pain point. When working with previous versions of SQL Server, even with mount points, you need at least one drive letter for each SQL Server failover cluster instance.
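The 23-instance ceiling is simple arithmetic. The sketch below assumes it comes from the 26 letters of the alphabet minus A and B (traditionally reserved for floppy drives) and C (the system volume). Under that assumption, and because every pre-2012 instance needs at least one letter, the letter count is the instance limit. SMB storage is addressed by UNC path instead, so that limit disappears. The file server name FS01 and the share names are hypothetical.

```python
import string
from typing import FrozenSet, List

# Assumption: A and B are reserved for floppy drives and C holds the system volume,
# which leaves the 23 letters cited in the text above.
DEFAULT_RESERVED: FrozenSet[str] = frozenset({"A", "B", "C"})


def available_drive_letters(reserved: FrozenSet[str] = DEFAULT_RESERVED) -> List[str]:
    return [letter for letter in string.ascii_uppercase if letter not in reserved]


def max_fci_instances_with_drive_letters(reserved: FrozenSet[str] = DEFAULT_RESERVED) -> int:
    # Pre-2012 FCIs need at least one drive letter each, even when mount points are used.
    return len(available_drive_letters(reserved))


def smb_share_paths(file_server: str, count: int) -> List[str]:
    """SMB storage is addressed by UNC path, so the count is not tied to drive letters."""
    return [f"\\\\{file_server}\\SQLData{n:02d}" for n in range(1, count + 1)]


if __name__ == "__main__":
    print(max_fci_instances_with_drive_letters())
    print(smb_share_paths("FS01", 30)[-1])
```

Every additional reserved letter, such as one for a DVD drive, lowers the drive-letter limit by one. The SMB path list can grow as far as the hardware allows.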

Some of the SQL Server 2012 benefits brought about by SMB are, of course, database-storage consolidation and the potential to support more than 23 failover cluster instances in a single WSFC. To take advantage of these features, the file servers must be running Windows Server 2008 or later versions of the operating system.

Database Recovery Advisor

The Database Recovery Advisor is a new feature aimed at optimizing the restore experience for database administrators conducting database recovery tasks. This tool includes a new timeline feature that provides a visualization of the backup history, as shown in Figure 2-14.

FIGURE 2-14 Database Recovery Advisor backup and restore visual timeline
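Choosing a restore point on such a timeline implies a restore sequence: the most recent full backup, the most recent differential taken after it, and then log backups up to the target time. The Database Recovery Advisor's internal logic is not described here. The following is a simplified, hypothetical Python sketch of that general idea. It ignores log sequence numbers, copy-only backups, and broken log chains, all of which a real restore plan must respect.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Backup:
    kind: str           # "FULL", "DIFF", or "LOG"
    finished: datetime  # completion time; a LOG backup covers activity up to here


def restore_sequence(history: List[Backup], target: datetime) -> List[Backup]:
    """Pick the backups needed to restore to `target` (simplified model)."""
    fulls = [b for b in history if b.kind == "FULL" and b.finished <= target]
    if not fulls:
        raise ValueError("no full backup completed before the target time")
    full = max(fulls, key=lambda b: b.finished)

    # The latest differential taken after that full backup, and not after the target.
    diffs = [b for b in history
             if b.kind == "DIFF" and full.finished < b.finished <= target]
    base = max(diffs, key=lambda b: b.finished) if diffs else full
    chain = [full] if base is full else [full, base]
    if base.finished == target:
        return chain

    # Roll forward with log backups taken after the base, stopping at the first
    # one that reaches the target (that one would be restored WITH STOPAT).
    logs = sorted((b for b in history if b.kind == "LOG" and b.finished > base.finished),
                  key=lambda b: b.finished)
    for log in logs:
        chain.append(log)
        if log.finished >= target:
            return chain
    raise ValueError("log backups do not reach the target time")
```

For example, with a full backup at midnight, a differential at noon, and log backups at 06:00, 18:00, and the following midnight, a target of 20:00 yields the full backup, the differential, and the last two log backups.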

Online Operations

SQL Server 2012 also includes a few enhancements for online operations that reduce downtime during planned maintenance. Online rebuilds of indexes that contain large object (LOB) columns and online addition of columns with default values are now supported.

Rolling Upgrade and Patch Management

All of the new AlwaysOn capabilities reduce application downtime to only a single manual failover by supporting rolling upgrades and patching of SQL Server. This means a database administrator can apply a service pack or critical fix to the passive node or nodes if using a failover cluster, or to the secondary replicas if using availability groups. Once the installation is complete on all passive nodes or secondaries, a database administrator can conduct a manual failover and then apply the service pack or critical fix to the former active node in an FCI or to the former primary replica in an availability group. This rolling strategy also applies when upgrading the database platform.
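The ordering of a rolling update can be written as a short plan. The minimal Python sketch below lists the steps for a set of nodes (or replicas) and the current active one. It shows that only one manual failover is needed. The function and node names are hypothetical, and the sketch assumes the failover target is healthy, which for an availability group means a synchronized secondary.

```python
from typing import List, Sequence


def rolling_patch_plan(nodes: Sequence[str], active: str) -> List[str]:
    """Order the steps of a rolling update so that only one manual failover occurs."""
    if active not in nodes:
        raise ValueError(f"{active} is not one of {list(nodes)}")
    passive = [n for n in nodes if n != active]
    if not passive:
        raise ValueError("a rolling update needs at least one passive node or secondary")
    steps = [f"patch {n}" for n in passive]                    # 1. update every passive node
    steps.append(f"manual failover {active} -> {passive[0]}")  # 2. the single planned failover
    steps.append(f"patch {active}")                            # 3. update the former active node
    return steps


if __name__ == "__main__":
    for step in rolling_patch_plan(["Node1", "Node2", "Node3"], active="Node1"):
        print(step)
```

Because the former active node is patched last, applications are interrupted only during the one failover step.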


CHAPTER 3

Performance and Scalability

Microsoft SQL Server 2012 introduces a new index type called columnstore. The columnstore index feature was originally referred to as Project Apollo during the development phases of SQL Server 2012 and during the distribution of the Community Technology Preview (CTP) releases of the product. This new index, combined with the advanced query-processing enhancements, offers blazing-fast